The tools used to make this site

As this site is primarily a learning exercise in publishing websites with CI/CD systems and any content on it is an afterthought, this is the obligatory post about how these words made it from my keyboard to your screen.

tl;dr;

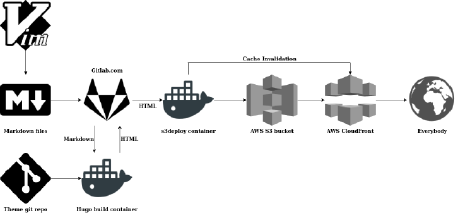

This site uses the Hugo static site generator with a lightly customised version of the Coder theme. I write content in Markdown files and store them here in GitLab. When changes are committed, a CI/CD pipeline generates the HTML files and publishes the final output to AWS S3. A CloudFront CDN reads from that S3 bucket and sends it to you.

Generating the pages

GitLab’s CI/CD system uses Docker images for each step that it takes, so the tools needed to build the site need to be packaged up into Docker images. That means Hugo and s3deploy.

For Hugo, I could use GitLab’s own Hugo images intended for use with their GitLab Pages setup, but as this is a learning exercise, and also because I wanted to use the extended variant of Hugo with more features enabled, I built my own based on GitLab’s. It uses the same updating system to poll for new versions of Hugo each night and push a change to trigger the build of a new package. The same Docker image is used locally for previewing changes while writing on my laptop.

s3deploy is written by bep, the creator of Hugo, and was written specifically to take the output generated by Hugo and push it to an AWS S3 bucket. There didn’t seem to be any regularly updated Docker images for it, so I made one. Since building this, it has become possible for Hugo to upload directly to an S3 bucket, but it can’t do the CloudFront cache invalidation which s3deploy does, so s3deploy is still useful.

Serving the site

GitLab CI runs s3deploy which pushes changes to an S3 bucket - and I could have stopped there. It is possible to serve a website straight out of an S3 bucket, and I have checked that it works with this site. However you can’t use TLS with an S3 hosted website and HTTPS is pretty much a hard requirement these days, even for a personal blog site.

So I have AWS CloudFront in front of the S3 bucket to do TLS offloading. The fact that it is also a very good CDN is an added benefit, but not something I’m likely to need for a personal website.

There are two ways of configuring CloudFront to use S3 as an origin to get content from - either using S3 in its website mode and making HTTP requests to it like CloudFront would when being a CDN for any site hosted outside of AWS, or using the S3 API to request objects. It doesn’t really matter for a public website, but publicly readable S3 buckets are not considered best practice, so I went with the API approach.

The downside of reading from the S3 API rather than an S3 website is that index

documents aren’t handled automatically, i.e. it doesn’t know that

https://plett.uk/about/ should really be https://plett.uk/about/index.html.

To solve this, I am running a Lambda@Edge function which gets called whenever

CloudFront requests an object from S3. It checks the path of the object being

requested from S3 and, if it ends with a /, adds index.html to it.

I also have a Lambda@Edge script to add some HTTP headers on the response back from the S3 bucket to enable HSTS, set a Content Security Policy and some other security headers, which all combine to give a good score on the Qualys SSL Report.

Workflow and writing the content

The master branch of the git repo is continously deployable. Commits to

it trigger the CI/CD pipeline to make changes live, so only finished content

gets put there, normally via a merge from a different git branch.

I typically have several incomplete articles in progress at any one time, so I

keep each article in its own branch and work on them locally on my laptop using

the same docker image that the GitLab CI runner uses, so I have a

reasonable level of confidence that the published site will look the same as my

local copy. Once I’m happy with the output, I merge it into the master branch.

For changes which might affect the ability to build the site, such as upgrading

to a newer version of Hugo or applying tweaks to the theme, I have a staging

branch which has its own GitLab CI config, S3 bucket and CloudFront CDN which

are all as similar as possible to the main site. I use this to check that it

still renders okay on multiple clients.

The staging site has another Lambda@Edge script on it which requires HTTP Basic Authentication to view it. That’s not for any security reasons - just that it is configured to render unfinished draft articles which don’t appear in the main site, so I wanted to stop it accidentally being crawled.

Costs

I could use a service like Netlify or GitHub Pages and have all of this hosted for zero cost, but this is a learning exercise so understanding all the moving parts is a requirement, and the costs for it are exceedingly small.

I’m using the GitLab.com SaaS Free Tier which gives me unlimited repositories and 2000 minutes of CI build time for free each month. A full build of the website and a sync of any changes to S3 takes between 60 and 90 seconds, meaning I can push changes to the website at least 50 times every day for a month before running into any limits. And, if I do, it is possible to self-host your own CI runner, or even your own GitLab instance.

For the AWS costs, having plett.uk in Route 53 costs me US$ 0.50 per month. My

Lambda@Edge usage is low enough to fit well inside the free tier limits, and the

S3 and CloudFront utilisation is low enough that it comes out at $0.00 each

month. If I actually write some content that people care about reading then

those figures might go up, but for now the total cost is $0.50 per month.